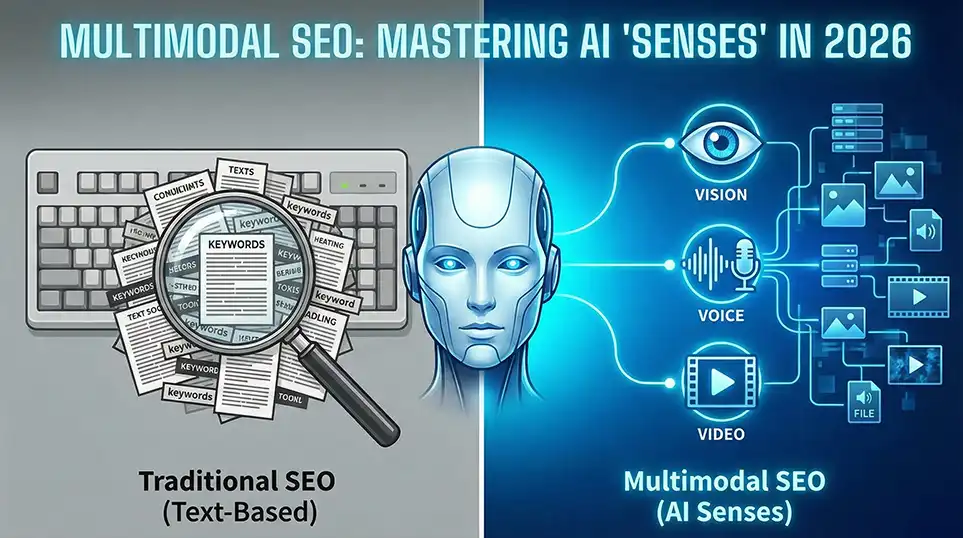

We have entered the “Sensory Era” of search.

For twenty years, SEO was a game of words. You typed text into a box; Google gave you text back. But in 2026, the keyboard is no longer the only interface for search: people increasingly start with a camera, a microphone, or a screen recording.

Multimodal SEO is the practice of optimizing your content for the AI’s “senses.”

With the explosion of models like GPT-4o and Google Gemini, AI can now “see” your product images, “hear” your podcast tonality, and “watch” your video demonstrations to understand context. If your brand only exists in text, you are effectively invisible to the modern searcher.

To survive, you must evolve from “Keywords” to “Signals.” This guide outlines the 7 critical steps to build a Multimodal SEO strategy that ranks in the eyes and ears of AI.

1. The Death of “Text-Only” Indexing

To understand Multimodal SEO, we must first understand how the index has changed.

Traditionally, Google relied on alt text, filenames, surrounding page text, and OCR (Optical Character Recognition) to interpret images. It was clumsy. Today, AI uses computer vision and vector embeddings. It doesn’t just read the text “Red Shoes”; it identifies the concept of a red shoe, along with its material, style, and occasion, even if the words “Red Shoes” never appear on the page.

This shift means your images and videos are no longer just “eye candy”—they are data containers. Your goal is to ensure these containers are packed with the right semantic signals.
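To make the contrast concrete, here is a toy Python sketch. The concept labels stand in for what a vision model might detect in a product photo; both the labels and the matching rules are hypothetical simplifications, not how any search engine actually scores images.

```python
def keyword_match(query: str, visible_text: str) -> bool:
    """Legacy-style check: every query word must appear in the readable text."""
    words = visible_text.lower().split()
    return all(term in words for term in query.lower().split())


def concept_match(query: str, concepts: set[str]) -> bool:
    """Concept-style check: every query term must map to a detected concept."""
    # Crude singularization so "shoes" lines up with the concept "shoe".
    terms = {term.rstrip("s") for term in query.lower().split()}
    return terms <= concepts


# A product photo with no readable text but rich visual content.
# These concept labels are hypothetical stand-ins for vision-model output.
photo_text = ""
photo_concepts = {"red", "shoe", "leather", "formal"}

print(keyword_match("red shoes", photo_text))      # nothing to read
print(concept_match("red shoes", photo_concepts))  # concepts line up
```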

2. Optimizing for “Computer Vision” (The AI Eye)

Visual search is one of the fastest-growing ways people search, driven by tools like Google Lens and Apple’s Visual Intelligence. When a user points their phone at a broken part and asks, “Where can I buy this?”, your image needs to be the answer.

Beyond Alt Text: “Scene Context”

Standard SEO taught you to write Alt Text like: alt="Blue running shoes". Multimodal SEO requires you to describe the scene and relationship, because that is what the AI is looking for.

- Legacy SEO: alt="Man drinking coffee"

- Multimodal SEO: alt="Young professional drinking artisan roast coffee in a minimalist coworking space, emphasizing productivity."

This extra context helps the AI match your image to complex queries like “aesthetic coffee shops for working remote.”
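One way to apply this consistently across a large catalog is to build alt text from the same structured fields every time: subject, action, setting, and purpose. The `Scene` fields and the 150-character warning threshold below are assumptions for illustration, not an official specification.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """Hypothetical fields describing what an image shows."""
    subject: str
    action: str
    setting: str
    purpose: str = ""


def scene_alt_text(scene: Scene, max_len: int = 150) -> str:
    """Compose scene-context alt text: who, doing what, where, and why."""
    text = f"{scene.subject} {scene.action} in {scene.setting}"
    if scene.purpose:
        text += f", {scene.purpose}"
    text = text[0].upper() + text[1:] + "."
    # max_len is an assumed house limit; warn instead of truncating so a
    # person can rewrite the sentence.
    if len(text) > max_len:
        print(f"warning: alt text is {len(text)} chars (limit {max_len})")
    return text


alt = scene_alt_text(Scene(
    subject="young professional",
    action="drinking artisan roast coffee",
    setting="a minimalist coworking space",
    purpose="emphasizing productivity",
))
print(alt)
```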

High-Fidelity Object Isolation

AI models prefer clear, uncluttered visual data. If you sell products, ensure you have:

- Isolated Product Renders: Images with white or transparent backgrounds (easier for the AI to “segment” and identify).

- Contextual Lifestyle Shots: Images showing the product in use (helps the AI understand scale and application).
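A rough way to audit product images for isolation is to check whether the outer ring of pixels is near-white or fully transparent. This sketch works on a plain grid of RGBA tuples so it runs without dependencies; in practice you might load pixels with an image library such as Pillow. The thresholds (`white_min`, `share`) are hypothetical starting points, and a clean border does not guarantee a clean segmentation.

```python
# Each pixel is (R, G, B, A) with channels in 0-255.
Pixel = tuple[int, int, int, int]


def border_pixels(grid: list[list[Pixel]]) -> list[Pixel]:
    """Collect the outermost ring of pixels from a 2-D grid of rows."""
    top, bottom = grid[0], grid[-1]
    middle = grid[1:-1]
    sides = [row[0] for row in middle] + [row[-1] for row in middle]
    return top + bottom + sides


def is_clean_background(px: Pixel, white_min: int = 240) -> bool:
    """True if the pixel is fully transparent or close to pure white."""
    r, g, b, a = px
    return a == 0 or min(r, g, b) >= white_min


def looks_isolated(grid: list[list[Pixel]], share: float = 0.95) -> bool:
    """Heuristic: most border pixels are white or transparent."""
    edge = border_pixels(grid)
    clean = sum(is_clean_background(px) for px in edge)
    return clean / len(edge) >= share


W = (255, 255, 255, 255)  # white
T = (0, 0, 0, 0)          # transparent
R = (200, 30, 30, 255)    # product color
G = (90, 110, 80, 255)    # busy background

studio_shot = [[W, W, W, W], [W, R, R, W], [W, R, R, W], [W, W, W, W]]
cutout = [[T, T, T], [T, R, T], [T, T, T]]
lifestyle = [[G, W, G, G], [G, R, R, G], [G, R, R, G], [G, G, W, G]]

print(looks_isolated(studio_shot), looks_isolated(cutout), looks_isolated(lifestyle))
```

A lifestyle shot failing this check is expected; the heuristic is only meant to confirm that your isolated renders really are isolated.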

3. “Sonic SEO”: Ranking for Voice and Audio

Voice search in 2026 isn’t just about “keywords” or “smart speakers.” It is about conversational interruption: users hold back-and-forth dialogues with AI agents, asking follow-up questions and cutting in mid-answer.

To optimize for this, your content must sound natural when read aloud.

The “Speakable” Schema

Use the speakable property from Schema.org to tell Google which parts of your content are best suited to audio playback. Note that Google documents this markup as a beta feature aimed at English-language news content.

```json
{
  "@context": "http://schema.org",
  "@type": "WebPage",
  "speakable": {
    "@type": "SpeakableSpecification",
    "cssSelector": ["#summary", ".key-takeaway"]
  }
}
```

This code invites the AI assistant to read your specific summary section when a user asks a question, rather than fumbling through your entire article.
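If you render pages from templates, you can generate the same markup programmatically. This minimal Python sketch builds WebPage JSON-LD with a SpeakableSpecification and wraps it in a `<script type="application/ld+json">` tag. The page URL and name are placeholders, and the CSS selectors must match elements that actually exist on your page.

```python
import json


def speakable_jsonld(css_selectors: list[str], page_url: str, name: str) -> str:
    """Return a <script> tag holding WebPage JSON-LD with a SpeakableSpecification."""
    data = {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "name": name,
        "url": page_url,
        "speakable": {
            "@type": "SpeakableSpecification",
            # Each selector should point at text that makes sense read aloud on its own.
            "cssSelector": css_selectors,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder URL and title; replace with your own page's values.
snippet = speakable_jsonld(
    ["#summary", ".key-takeaway"],
    page_url="https://www.example.com/multimodal-seo",
    name="Multimodal SEO guide",
)
print(snippet)
```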

Phonetic Optimization

Multimodal SEO also considers how your brand names and keywords sound. If your product name is “Xylophone,” but users pronounce it “Zylophone,” ensure your content (and audio transcripts) reflects the phonetic reality. Mismatches between spelling and pronunciation are a common cause of “Voice Search Misses.”
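For transcripts and captions you publish, one simple approach is to keep a list of known phonetic variants and map them back to the canonical spelling. The variant list here is hypothetical; in practice you would build it from voice queries and transcription errors you have actually seen.

```python
import re

# Hypothetical mapping from spoken or misheard variants to the canonical name.
VARIANTS = {
    "Xylophone": ["zylophone", "zylo phone", "xylo phone"],
}


def normalize_transcript(text: str, variants: dict[str, list[str]] = VARIANTS) -> str:
    """Replace known phonetic variants with the canonical brand spelling."""
    for canonical, spoken_forms in variants.items():
        for form in spoken_forms:
            # Word boundaries stop the pattern from matching inside longer words.
            pattern = r"\b" + re.escape(form) + r"\b"
            text = re.sub(pattern, canonical, text, flags=re.IGNORECASE)
    return text


print(normalize_transcript("I just bought a zylophone. Is the Zylo Phone waterproof?"))
```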

4. Video Parsing: The “Frame-by-Frame” Index

Video is the “Heavyweight Champion” of Multimodal SEO. AI models can now analyze a video’s frames and audio track directly, so your title is no longer the only clue: the models evaluate the video content itself.

If you have a video on “How to fix a sink,” the AI can identify the exact second you pick up the wrench.

Chapter Segmentation is Mandatory

You must break your video into clear, labeled chapters.

- 0:00 – Diagnosis

- 1:30 – Tools Needed

- 3:45 – Removing the P-Trap

These timestamps act as “Deep Links.” An AI agent can direct a user specifically to 3:45 if they ask, “How do I remove the P-Trap?” skipping the rest. Without chapters, your video is a “black box” that the AI might ignore.

This aligns with our Action Search strategy, where facilitating the user’s immediate goal is the priority.
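Chapters can be generated and sanity-checked before you paste them into a description. The sketch below checks the rules YouTube documents for chapters (first timestamp at 0:00, at least three timestamps in ascending order, each chapter at least 10 seconds long) and builds a deep link with YouTube’s `t` URL parameter. The video ID and the 420-second video length are placeholders.

```python
def fmt(seconds: int) -> str:
    """Format seconds as M:SS, or H:MM:SS for long videos."""
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    return f"{h}:{m:02d}:{s:02d}" if h else f"{m}:{s:02d}"


def chapter_block(chapters: list[tuple[int, str]], video_length: int) -> str:
    """Render a chapter list for a video description, checking YouTube's rules."""
    starts = [start for start, _ in chapters]
    if not starts or starts[0] != 0:
        raise ValueError("first chapter must start at 0:00")
    if len(chapters) < 3:
        raise ValueError("at least three chapters are required")
    ends = starts[1:] + [video_length]
    for (start, title), end in zip(chapters, ends):
        # Also catches out-of-order timestamps, which give a negative length.
        if end - start < 10:
            raise ValueError(f"chapter '{title}' is shorter than 10 seconds")
    return "\n".join(f"{fmt(start)} {title}" for start, title in chapters)


def deep_link(video_id: str, seconds: int) -> str:
    """Link straight to a moment using YouTube's t= URL parameter."""
    return f"https://www.youtube.com/watch?v={video_id}&t={seconds}s"


chapters = [(0, "Diagnosis"), (90, "Tools Needed"), (225, "Removing the P-Trap")]
print(chapter_block(chapters, video_length=420))
print(deep_link("VIDEO_ID", 225))
```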

5. The “Vector Database” Advantage

This is the technical backbone of Multimodal SEO.

Modern search engines increasingly represent content as “vectors”: lists of numbers that act as coordinates. Text, images, and audio can all be converted into vectors in the same shared space, which has hundreds or thousands of dimensions rather than three. “Apple” (the word), a picture of an apple, and the sound of a crunch all land close together in this vector space.
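To make the vector idea concrete, here is a toy Python sketch using cosine similarity, a common way to compare embeddings. The three-dimensional vectors are invented for illustration; real embeddings come from a model and have far more dimensions, and no search engine’s actual scoring is implied.

```python
import math

# Toy "embeddings" invented for illustration only.
vectors = {
    "apple (word)":   [0.90, 0.10, 0.05],
    "apple (photo)":  [0.85, 0.15, 0.10],
    "crunch (audio)": [0.70, 0.30, 0.05],
    "car (photo)":    [0.05, 0.10, 0.95],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, near 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


query = vectors["apple (word)"]
for name, vec in vectors.items():
    print(f"{name:15} {cosine(query, vec):.2f}")
```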

How to Leverage This?

You cannot “edit” your vectors directly, but you can create Multimodal Clusters.

- Don’t just write a blog post.

- Embed a video on the exact same topic.

- Add an infographic with the exact same data.

By reinforcing the same concept across three different modalities (Text, Video, Image), you create a “dense” vector signal: several assets that all point to the same region of vector space. That consistency makes it more likely the AI treats your URL as the definitive source for that topic.
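If you have embeddings for each asset on a page (for example, from a multimodal embedding model of your choice), you can check whether the cluster actually agrees with itself. The sketch below averages pairwise cosine similarity across the assets; this “coherence” score is a hypothetical audit metric, not a known ranking factor, and the vectors are toy values.

```python
import math
from itertools import combinations


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def cluster_coherence(assets: dict[str, list[float]]) -> float:
    """Mean pairwise cosine similarity across a page's assets (needs 2+ assets)."""
    pairs = list(combinations(assets.values(), 2))
    return sum(cosine(a, b) for a, b in pairs) / len(pairs)


# Toy vectors, invented for illustration.
on_topic = {
    "blog post":   [0.80, 0.20, 0.10],
    "video":       [0.75, 0.25, 0.05],
    "infographic": [0.85, 0.15, 0.10],
}
off_topic = {
    "blog post":   [0.80, 0.20, 0.10],
    "video":       [0.10, 0.90, 0.20],  # the video drifts to another subject
    "infographic": [0.05, 0.10, 0.95],
}

print(f"on-topic cluster:  {cluster_coherence(on_topic):.2f}")
print(f"off-topic cluster: {cluster_coherence(off_topic):.2f}")
```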

6. Entity Identity in a Multimodal World

As we discussed in Reasoning Engine Optimization, trust is paramount. In Multimodal SEO, your visual identity helps establish that trust.

- Consistent Visual Branding: Use the same logo, color palette, and font across your website, YouTube thumbnails, and social media. The AI “sees” this consistency and attributes “Brand Entity Authority.”

- Face Recognition for Authors: Use consistent headshots for your authors. If “Jane Doe” is an expert, the AI should be able to recognize her face in a video, a bio photo, and a conference lineup. This links her “Visual Identity” to her “Textual Expertise.”
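Schema.org markup is one way to make this visual identity machine-readable: Organization supports `logo` and `sameAs`, and Person supports `image`, `jobTitle`, `worksFor`, and `sameAs`. In this sketch every name and URL is a placeholder; the point is to reuse the same logo and headshot URLs wherever the entity appears.

```python
import json

# Every name and URL below is a placeholder.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Co",
    "url": "https://www.example.com",
    "logo": "https://www.example.com/assets/logo.png",
    # Profiles that should be recognized as the same brand entity.
    "sameAs": [
        "https://www.youtube.com/@exampleco",
        "https://www.linkedin.com/company/exampleco",
    ],
}

author = {
    "@context": "https://schema.org",
    "@type": "Person",
    "name": "Jane Doe",
    "jobTitle": "Head of SEO",
    "worksFor": {"@type": "Organization", "name": "Example Co"},
    # Reuse this exact headshot URL on bio pages, video pages, and speaker pages.
    "image": "https://www.example.com/team/jane-doe.jpg",
    "sameAs": ["https://www.linkedin.com/in/jane-doe-example"],
}


def as_script(entity: dict) -> str:
    """Wrap one entity in a JSON-LD script tag for embedding in a page."""
    return '<script type="application/ld+json">\n' + json.dumps(entity, indent=2) + "\n</script>"


for entity in (organization, author):
    print(as_script(entity))
```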

7. Measuring Multimodal Success

How do you track this? “Clicks” alone tell an incomplete story.

- Google Lens Traffic: Check the Performance report in Google Search Console, including the Image search type and any “Google Lens” or “Visual Search” filters available under “Search Appearance.”

- Voice Impressions: These are harder to track, but look for “Question Keywords” (Who, What, Where) in your analytics that pair high “Time on Page” with low “Scroll Depth.” That pattern may indicate a user listened to an answer rather than reading the page.

- Video Key Moments: In YouTube Analytics, track which “Key Moments” are being clicked. These are your most valuable “Answer Tokens.”
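The voice heuristic can be applied to an analytics export. This sketch assumes hypothetical row fields (`query`, `time_on_page` in seconds, `scroll_depth` as a 0 to 1 fraction) and hypothetical thresholds; adjust both to whatever your analytics tool actually exports. Treat the output as a list of candidates to review, not proof of voice traffic.

```python
# Hypothetical analytics rows; field names depend on your analytics export.
rows = [
    {"query": "what is multimodal seo", "time_on_page": 95, "scroll_depth": 0.15},
    {"query": "multimodal seo agency", "time_on_page": 120, "scroll_depth": 0.10},
    {"query": "how to write alt text", "time_on_page": 40, "scroll_depth": 0.80},
]

QUESTION_WORDS = {"who", "what", "where", "when", "why", "how"}


def likely_listened(rows: list[dict], min_seconds: float = 60, max_scroll: float = 0.25) -> list[str]:
    """Return question queries with long dwell time but shallow scrolling."""
    flagged = []
    for row in rows:
        words = row["query"].lower().split()
        is_question = bool(words) and words[0] in QUESTION_WORDS
        if is_question and row["time_on_page"] >= min_seconds and row["scroll_depth"] <= max_scroll:
            flagged.append(row["query"])
    return flagged


print(likely_listened(rows))
```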

Conclusion: The Whole-Brain Strategy

Multimodal SEO is not about doing more work; it is about doing holistic work.

In 2026, you cannot afford to be just a “text” company. You must be a “media” company. The AI agents searching the web are simulating human senses. They want to see, hear, and understand.

If you feed them rich, structured, and diverse data, they will reward you with the ultimate prize: being the “Universal Answer” across every device.

Recommended External Resource

To see the technical side of how AI parses content, I recommend watching this breakdown on Optimizing Content for AI Overviews. It explains the vector-based analysis that underpins how AI connects text, images, and concepts in the modern search index, which is the core of Multimodal SEO.

… How to Optimize for AI Overviews …

What Can You Do Next?

Multimodal SEO starts with a visual audit. You likely have hundreds of images that are effectively “blank” to an AI.
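A first pass at that audit can be automated. This sketch uses Python’s standard-library `html.parser` to flag `<img>` tags with missing alt text, very short alt text, or camera-default filenames. The four-word minimum and the filename pattern are hypothetical thresholds, and purely decorative images legitimately use an empty alt attribute, so review the flags by hand.

```python
import re
from html.parser import HTMLParser

# Camera-default filenames such as IMG_1234.jpg or DSCN0042.JPG carry no meaning.
GENERIC_NAME = re.compile(r"^(img|dsc|dscn|pxl|image|photo)[_-]?\d+", re.IGNORECASE)


class ImageAudit(HTMLParser):
    """Collect <img> tags whose alt text or filename gives an AI little to work with."""

    def __init__(self, min_alt_words: int = 4):
        super().__init__()
        self.min_alt_words = min_alt_words
        self.issues: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src") or ""
        alt = (attr.get("alt") or "").strip()
        filename = src.rsplit("/", 1)[-1]
        if not alt:
            self.issues.append((src, "missing alt text"))
        elif len(alt.split()) < self.min_alt_words:
            self.issues.append((src, "alt text lacks scene context"))
        if GENERIC_NAME.match(filename):
            self.issues.append((src, "generic camera filename"))


html = """
<img src="/uploads/IMG_4821.jpg">
<img src="/uploads/red-leather-oxford-shoe.jpg" alt="Shoe">
<img src="/uploads/coworking-coffee.jpg" alt="Young professional drinking artisan roast coffee in a minimalist coworking space">
"""
audit = ImageAudit()
audit.feed(html)
for src, problem in audit.issues:
    print(f"{src}: {problem}")
```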

