The power of AI isn’t just in polished web interfaces. The real, raw power for developers, data scientists, and system administrators is in automation, integration, and the command line. It’s the ability to pipe a server log into an AI and ask, “Find the critical error,” or to have an AI co-pilot that lives right in your terminal, ready to write code snippets on command.

But how do you bridge the gap between Google’s powerful Gemini models and your own terminal? For many, it can seem intimidating, a complex world of APIs, cloud projects, and authentication.

This is where a proper Gemini CLI setup comes in. It’s the key to unlocking true, personalized AI-powered automation.

While Google doesn’t offer a single, downloadable “Gemini CLI” tool, this guide will show you the official, professional way to build your own. We will use the Google AI SDK for Python to create a powerful command-line tool that can talk directly to the Gemini 1.5 Pro model.

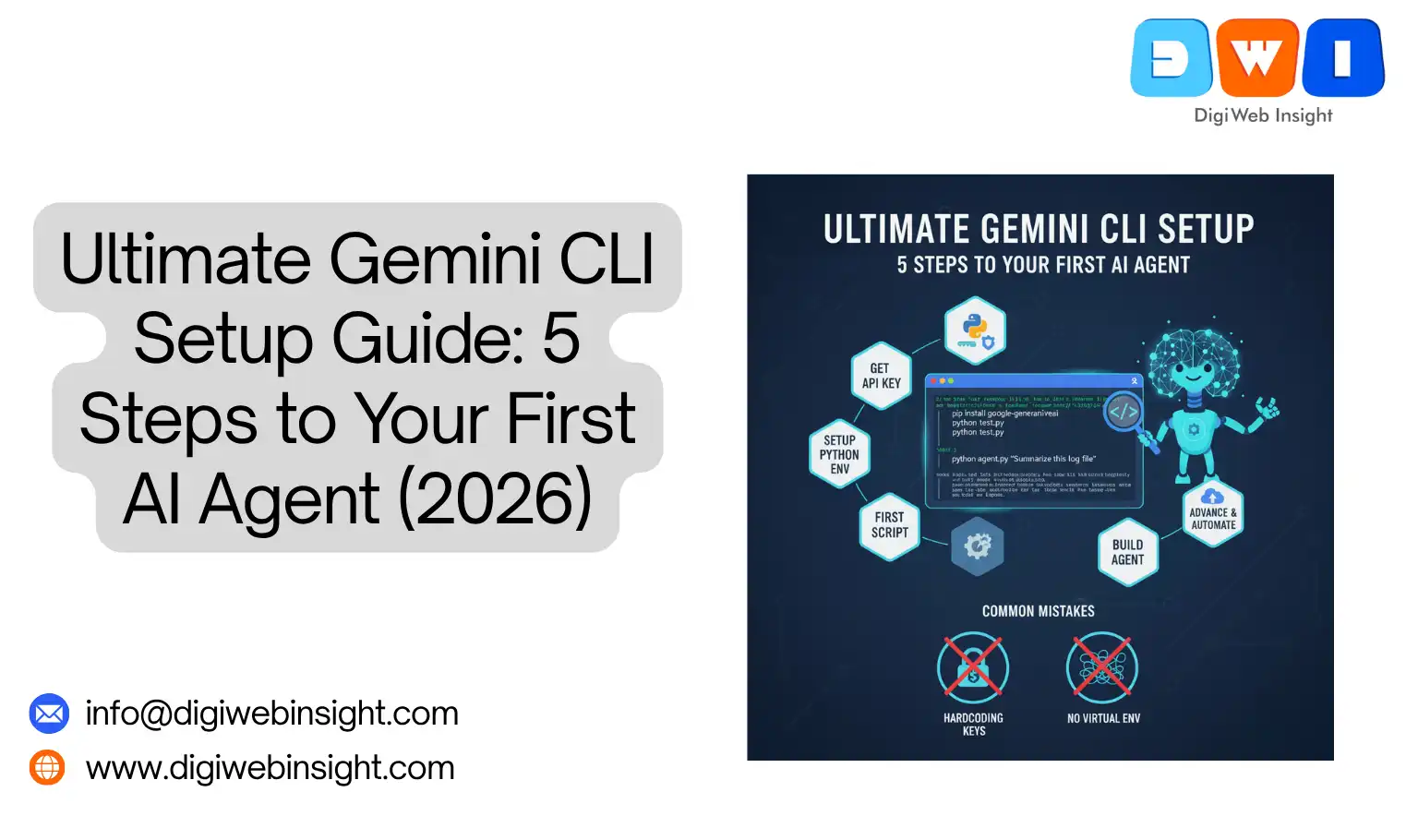

This guide will take you from zero to hero in five comprehensive steps: getting your non-negotiable API key, setting up a clean Python environment, installing the SDK, writing your first “Hello, World” script, and finally, building a true “AI agent” that can take your instructions directly from your terminal.

Gemini CLI Setup (And Why You Need One)

First, let’s clarify our terms to set the right expectations. A “Gemini CLI” isn’t a single product you download from Google. It’s a project you build. It’s a script or application you write that allows you to interact with the powerful Gemini API directly from your command-line interface (CLI).

In this context, an “AI Agent” is a script that can autonomously perform tasks or respond to queries. Our “first agent” will be a simple but powerful Python script that can take your text prompt as a command-line argument, send it to Google, and print the AI’s response right back into your terminal.

But why not just use the web UI? The reasons are all about power and integration. A Gemini CLI setup gives you automation: an agent that also reads standard input lets you pipe data straight into it, with a command like cat server.log | python agent.py "summarize these errors". It also unlocks integration, letting you call your AI agent from other scripts, like a Bash script that automates your daily reports. Finally, it provides speed; it’s often much faster for quick, text-based tasks than opening a browser, logging in, and clicking around.

This guide will focus on the fastest, most direct path to a working Gemini CLI setup: using the Google AI Python SDK and getting a free API key from Google AI Studio.

Step 1: Get Your API Key from Google AI Studio

This is the non-negotiable first step. You cannot interact with the API without an authentication key. While you can get one through a full Google Cloud project, the simplest method is via Google AI Studio.

Google AI Studio is a free, web-based tool for prototyping with Gemini models. It’s the perfect place to start.

To get your key, first, navigate to aistudio.google.com in your browser. You’ll need to sign in with your Google account. Once you’re in, look to the menu on the left and click the “Get API Key” button. From there, you’ll see an option to “Create API key in new project.” Give your project a name if you wish, and in a few seconds, your key will be generated. Copy this long string of letters and numbers immediately.

Now for the most crucial part: security. You must never hardcode this API key directly into your script. If you share your code or upload it to GitHub, anyone who finds the key can use it on your account, and leaked keys in public repositories tend to be found quickly.

The professional way to handle your key is by saving it as an environment variable. This makes the key available to your scripts without ever writing it on disk in your project folder.

For macOS or Linux, open your terminal and type: export GOOGLE_API_KEY='YOUR_API_KEY_HERE'

For Windows Command Prompt, type: setx GOOGLE_API_KEY "YOUR_API_KEY_HERE"

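The two commands behave differently. On macOS or Linux, export makes the key available only in the current terminal session; to make it permanent, add the same line to your shell’s configuration file, such as .zshrc or .bash_profile. On Windows, setx stores the key permanently for your user account but does not affect the window you ran it in, so close and reopen Command Prompt before continuing. You can also manage the variable through the “Edit the system environment variables” panel in Windows.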
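To confirm that Python can actually see the variable before going further, a small check like the following may help. It is only a sketch: describe_api_key is a hypothetical helper name, and it prints just the last four characters so the full key never ends up in your terminal history or logs.

```python
import os


def describe_api_key(env=os.environ, name="GOOGLE_API_KEY"):
    """Return a short status string without revealing the full key."""
    key = env.get(name, "").strip()
    if not key:
        return f"{name} is not set"
    # Show only the last four characters so the secret stays out of logs.
    return f"{name} is set (ends with ...{key[-4:]})"


print(describe_api_key())
```

If this reports that the variable is not set, open a new terminal (Windows) or re-run the export line (macOS or Linux) and try again.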

Step 2: Install and Configure Your Python Environment

This is the core “install” portion of your Gemini CLI setup. We will use Python, as its Google AI SDK is mature and easy to use.

Why You MUST Use a Virtual Environment

Before you type pip install, it is critical that you create a virtual environment. This is a best practice that isolates your project’s dependencies (like the Google AI library) from your computer’s main Python installation. This prevents version conflicts between projects and keeps your system clean.

Creating Your Virtual Environment

First, navigate in your terminal to a folder where you want your new project to live.

On macOS or Linux, run these two commands:

python3 -m venv gemini-cli-env

source gemini-cli-env/bin/activate

On Windows, run these two commands:

py -m venv gemini-cli-env

.\gemini-cli-env\Scripts\activate

The first command creates a new directory (named gemini-cli-env) containing its own Python interpreter and its own package folder. The second command “activates” this environment. You’ll know it’s working because your terminal prompt will change to show (gemini-cli-env) at the beginning. You are now “inside” your safe, isolated sandbox.
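If the prompt change is easy to miss, you can also ask Python itself. The sketch below relies on the standard library only; in_virtualenv is an illustrative name, not part of any SDK.

```python
import sys


def in_virtualenv():
    """True when the running interpreter belongs to a venv-created environment."""
    # Inside a venv, sys.prefix points at the environment, while
    # sys.base_prefix still points at the Python it was created from.
    return sys.prefix != sys.base_prefix


print("virtual environment active:", in_virtualenv())
print("interpreter prefix:", sys.prefix)
```

When the environment is active, the prefix printed on the second line should end in gemini-cli-env.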

Installing the Google AI SDK

Now for the magic. With your virtual environment active, run this single command: pip install google-generativeai

This will reach out to the Python Package Index, download the official Google library, and install it inside your gemini-cli-env environment. This package is the bridge that does all the heavy lifting of communicating between your Python script and the Gemini API.
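A quick way to verify the install landed in the active environment is to look up the package version from Python. This is a minimal sketch using the standard library's importlib.metadata (Python 3.8 or later); installed_version is a hypothetical helper name.

```python
from importlib import metadata


def installed_version(distribution="google-generativeai"):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


version = installed_version()
print("google-generativeai:", version or "not installed in this environment")
```

If it reports the package as missing, check that the virtual environment is active and run the pip command again.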

Step 3: Your “Hello, World” – The First Gemini Script

You have your key. You have your environment. It’s time for the “Aha!” moment. Let’s write a simple script to prove that your entire Gemini CLI setup is working from end to end.

First, create a new file. In your terminal, type touch test.py (or echo. > test.py for Windows). Now, open this test.py file in your favorite text editor or IDE and type the following code.

The Full “Hello, World” Script

Python

import google.generativeai as genai
import os
import sys

print("Script started...")

try:
    # Configure the API key
    api_key = os.environ.get("GOOGLE_API_KEY")
    if not api_key:
        print("Error: GOOGLE_API_KEY environment variable not set.")
        sys.exit(1)
    genai.configure(api_key=api_key)
    print("API key configured...")

    # Create the model
    # We use gemini-1.5-pro-latest for the best capability
    model = genai.GenerativeModel('gemini-1.5-pro-latest')
    print("Model created...")

    # Generate content
    prompt = "Explain the term 'Hello, World' in 3 sentences as if to a developer."
    response = model.generate_content(prompt)
    print("...Response received!")

    # Print the text response
    print("\n--- Gemini's Response ---")
    print(response.text)
    print("-------------------------")
except Exception as e:
    print(f"An error occurred: {e}")

This script first imports the necessary libraries. It then safely reads your API key from the environment variables you set in Step 1. If it can’t find the key, it will print a helpful error. It then configures the genai library, selects the model (we’re using the powerful gemini-1.5-pro-latest), and sends our hardcoded prompt. Finally, it prints the text part of the response.

Running Your Script

Save the file and go back to your terminal (make sure your virtual environment is still active). Now, simply run: python test.py

If everything is configured correctly, you will see your own print statements execute one by one, followed by a short pause, and then… magically, AI-generated text will appear in your terminal. You have just completed a successful Gemini CLI setup and had your first conversation with the model.
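One case worth planning for: to the best of my knowledge, the SDK's response.text accessor raises ValueError when the response contains no text part, for example when a prompt is blocked. The sketch below assumes that behavior and assumes the response exposes a prompt_feedback attribute; response_text_or_reason is a hypothetical helper, not an SDK function.

```python
def response_text_or_reason(response):
    """Return the response text, or a short explanation if none is available."""
    try:
        return response.text
    except ValueError as exc:
        # Assumption: the SDK signals "no usable text" with ValueError and
        # may describe why in response.prompt_feedback.
        feedback = getattr(response, "prompt_feedback", None)
        return f"[no text returned: {exc}; prompt_feedback={feedback}]"
```

In test.py you could then write print(response_text_or_reason(response)) instead of print(response.text), so a blocked prompt produces a readable message rather than the generic error line.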

Step 4: From Script to “First Agent” (Accepting Terminal Arguments)

This step fulfills the “First Agent” promise of the title. Our first script was great, but it’s not very useful since the prompt is hardcoded. Let’s create a new script that can accept a prompt from the command line, making it a true, reusable tool.

Create a new file named agent.py. Open it and enter the following code.

The “First Agent” Script (agent.py)

Python

import google.generativeai as genai
import os
import sys


def main():
    try:
        # 1. Configure the API Key
        api_key = os.environ.get("GOOGLE_API_KEY")
        if not api_key:
            print("Error: GOOGLE_API_KEY environment variable not set.")
            sys.exit(1)
        genai.configure(api_key=api_key)

        # 2. Check if a prompt was provided
        # sys.argv is a list of all arguments.
        # The script name is sys.argv[0]
        if len(sys.argv) < 2:
            print("Usage: python agent.py 'Your prompt here'")
            sys.exit(1)

        # 3. Join all arguments to form a single prompt
        # This allows you to write a prompt with spaces
        prompt = " ".join(sys.argv[1:])
        print(f"--- Sending Prompt to Gemini --- \n{prompt}\n")
        print("...Waiting for response...")

        # 4. Create the model and generate content
        model = genai.GenerativeModel('gemini-1.5-pro-latest')
        response = model.generate_content(prompt)

        # 5. Print the response
        print("\n--- Gemini's Response ---")
        print(response.text)
        print("-------------------------")
    except Exception as e:
        print(f"An error occurred: {e}")


if __name__ == "__main__":
    main()

This new script uses Python’s built-in sys module. sys.argv is a list of the arguments the shell passed to Python, so a quoted string arrives as a single item. sys.argv[0] is the name of the script itself (agent.py), so we check whether the list has fewer than 2 items. If it does, no prompt was given, and we print a usage message and exit.

The clever part is prompt = " ".join(sys.argv[1:]). This takes all arguments after the script name and joins them into a single string, so the prompt works whether or not you wrap it in quotes. Quoting is still the safer habit, because characters such as ?, * and ' have special meaning to the shell.

How to Use Your New Gemini CLI Agent

Save this new agent.py file. Now, back in your terminal, you can run it like this:

python agent.py "What is the capital of France?"

After a moment, you’ll see it send your prompt and then print the response. Try something more complex:

python agent.py "Write a 3-line python script to list all files in the current directory"

You have now built a truly functional and reusable Gemini CLI setup that acts as your AI co-pilot.
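The piping example from earlier (cat server.log | python agent.py "summarize these errors") needs the agent to read standard input, which agent.py as written does not do: it only looks at sys.argv. A minimal sketch of one way to add that is below. build_prompt is a hypothetical helper, and placing the piped text after the instruction is just one reasonable layout.

```python
import sys


def build_prompt(args, stdin=sys.stdin):
    """Combine command-line words with any text piped in on stdin."""
    instruction = " ".join(args).strip()
    # isatty() is True when stdin is an interactive terminal,
    # which means nothing was piped in and we must not block on read().
    piped = "" if stdin is None or stdin.isatty() else stdin.read().strip()
    if instruction and piped:
        return f"{instruction}\n\n{piped}"
    return instruction or piped
```

To use it, replace the " ".join(sys.argv[1:]) line in agent.py with prompt = build_prompt(sys.argv[1:]), and change the usage check so it tests for an empty prompt rather than len(sys.argv) < 2. Keep in mind that very large logs may exceed the model's input limits.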

Step 5: Advancing Your Gemini CLI Agent (Next Steps)

What you’ve built is just the beginning. The real power comes from expanding on this simple agent. Here are a few ways to make your Gemini CLI setup even more powerful.

Making it Conversational (Adding Chat History)

Right now, your agent is single-turn. It forgets everything after each command. To make it a persistent chatbot, you can use Gemini’s chat functionality. Instead of model.generate_content(), you would first initialize a chat with model.start_chat().

You could create a new script, chat.py, that starts a while True: loop. Inside the loop, it would take user input using prompt = input("> "), send it using response = chat.send_message(prompt), and then print response.text. This creates a continuous chat session that remembers the context of your conversation, all within your terminal.
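The chat.py loop described above might look roughly like this. It is a sketch, not a finished tool: chat_loop and main are illustrative names, the exit/quit commands are my own addition, and the loop receives its send function as a parameter so it can be tried out without a network call. The only SDK calls used are the ones named in the text plus genai.configure and GenerativeModel from the earlier scripts.

```python
def chat_loop(send_message, read=input, write=print):
    """Run a prompt/response loop until the user types exit, quit, or sends EOF."""
    turns = 0
    while True:
        try:
            prompt = read("> ").strip()
        except EOFError:  # Ctrl-D (or Ctrl-Z on Windows) ends the session
            break
        if prompt.lower() in {"exit", "quit"}:
            break
        if not prompt:
            continue  # ignore empty lines instead of sending them
        response = send_message(prompt)
        write(response.text)
        turns += 1
    return turns


def main():
    # Imported here so chat_loop can be used without the SDK installed.
    import os
    import google.generativeai as genai

    genai.configure(api_key=os.environ["GOOGLE_API_KEY"])
    model = genai.GenerativeModel("gemini-1.5-pro-latest")
    chat = model.start_chat(history=[])  # the chat object keeps the conversation context
    chat_loop(chat.send_message)
```

Save it as chat.py, call main() under the usual if __name__ == "__main__": guard, and run python chat.py to start a session.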

Handling Files with Gemini 1.5 Pro

This is where your agent becomes truly next-level. Gemini 1.5 Pro has a massive context window and can analyze files. The Python SDK makes this easy. You can upload a file with a command like sample_file = genai.upload_file(path="my_doc.pdf").

Then, you can send both the file and a prompt to the model: response = model.generate_content([sample_file, "Summarize this document."])

Imagine the possibilities for your Gemini CLI setup. You could create an agent that takes a filename as an argument (e.g., python agent.py "my_code.py" "find any bugs in this code") and get a full code review. This is where the command line truly shines. For a deep dive, check out the official Google AI Python SDK documentation.
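As a rough sketch of that file-reviewing agent, the code below takes a filename followed by an instruction, checks the file exists, then uses the upload_file and generate_content calls shown above. parse_file_args, main, and the script name file_agent.py are hypothetical; whether a given file type is accepted by the upload step is something to confirm in the SDK documentation.

```python
import os
from pathlib import Path


def parse_file_args(argv):
    """Split ['path', 'words', ...] into (Path, instruction) or raise ValueError."""
    if len(argv) < 2:
        raise ValueError("usage: python file_agent.py FILE 'instruction'")
    path = Path(argv[0])
    if not path.is_file():
        raise ValueError(f"not a readable file: {path}")
    return path, " ".join(argv[1:])


def main(argv):
    # Imported here so parse_file_args can be used without the SDK installed.
    import google.generativeai as genai

    path, instruction = parse_file_args(argv)
    genai.configure(api_key=os.environ["GOOGLE_API_KEY"])
    uploaded = genai.upload_file(path=str(path))
    model = genai.GenerativeModel("gemini-1.5-pro-latest")
    # Send the uploaded file and the instruction together, as in the example above.
    response = model.generate_content([uploaded, instruction])
    print(response.text)
```

Called as main(sys.argv[1:]) from a script, this would support python file_agent.py my_code.py "find any bugs in this code".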

Making it a “Real” Shell Command (Advanced)

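For a final professional touch on macOS or Linux, you can make your agent.py script executable so you don’t have to type python every time. By adding a “shebang” line (#!/usr/bin/env python3) to the very top of the file and making it executable with chmod +x agent.py, you can run it directly with ./agent.py "My prompt". Because the shebang picks up whichever python3 comes first on your PATH, the virtual environment still needs to be active for the SDK to be found.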

If you then add your project’s directory to your system’s PATH, you could run your agent from anywhere in your terminal, just like a native command.

Conclusion: Your Terminal is Now Your AI Co-pilot

Congratulations. You have successfully completed a full Gemini CLI setup. You’ve gone from a simple idea to a working, powerful AI tool.

You didn’t just install a tool; you built one. In the process, you’ve configured your API key securely, set up a professional and isolated Python environment, installed the official SDK, and written scripts that can handle both single-turn prompts and, with the next steps, full conversations and file analysis.

The power is now in your hands. You can integrate this agent into your deployment scripts, use it to analyze data, or simply have it help you write code and answer questions. Your terminal is now a direct line to one of the most powerful AI models on the planet.
